The #innotribe group is now in full swing, moving between presentations and workshops, dialogues and debates, agreements and arguments, confusion and clarity …

... you get the idea, with innovation there is no certainty, just the uncertainty of the certain.

The uncertainty of the certain future that is.

As mentioned, we started the debate this morning on digital identities.

It’s a tough area to crack, as many have tried and failed, but SWIFT has been running research into how to crack this nut for over a year as a pivotal piece of the innotribe workstream.

This research will be published in the near future, and today was more about:

- how to create a trusted digital framework,

- what would comprise a digital certificate or token that was trusted,

- how would individuals use such tokens,

- what functionality would be needed,

- what are the attributes of trusted digital exchanges,

- how would SWIFT be involved, if at all,

- what is the nature of trust, etc, etc.

All ephemeral and esoteric questions, but fundamental things that need addressing, and have always needed addressing throughout time.

The fact that these things have always needed addressing, yet we have still to address them, is the issue. Can a trusted digital framework ever be achieved, I wonder?

We then moved on to look at BIG DATA and what all that means.

I’ve talked about big data a lot already, and this session was opened by Sean Park of the Park Paradigm and Anthemis.

Sean began by asking questions, such as: “What if you could walk into a store and they would know what you want to buy before you get there?” Scary.

He then outlined a future where everything in your life has been captured, every second and microsecond, in real time.

The future human knows when they will expire by natural (or unnatural) causes, and every second and microsecond of future life is known with few unknowns, through data and metadata that is crunched and recrunched in real-time.

Scarier still.

The idea is to take big data and turn it into big information, turning complex data into actionable information in real time.

And the future dream (or nightmare) is that the bank is central to all of this, as the cruncher of that data.

McKinsey’s Michael Chui then piped up and outlined how data storage has grown over the years, with the average 1,000-employee bank holding almost 2,000 terabytes of data today. That’s ten times more than the US Library of Congress.

The efficiency gains of big data are key here, as it can create transparency, expose variability, enable experimentation, provide segmentation to the point of one-to-one marketing, and more.

HP’s Larry Ryan was then on stage talking about big data, and pointed at various examples including my own personal favourite, Zynga.

Zynga’s “Empires and Allies” game grew from nothing to 30 million people playing in just 17 days. That goes with Farmville, which went from no users to 60 million in 2009, and Cityville, which went from none to 100 million in just six weeks in early 2011.

That’s a firm really using big data analytics to gain traction and attraction.

Jeff Jonas of IBM followed, and introduced the idea that big data is critically challenged because it needs context.

He illustrated this by asking us to imagine receiving only one piece of data at a time.

It’s a jigsaw.

How do you relate the data to make sense of the data in context?

That’s the challenge of big data, which led us into a team debate where each person got a piece of the puzzle and had to work out everything they could about their piece before joining the others to relate them together.

This is where it gets interesting, as my light bulb suddenly switches on.

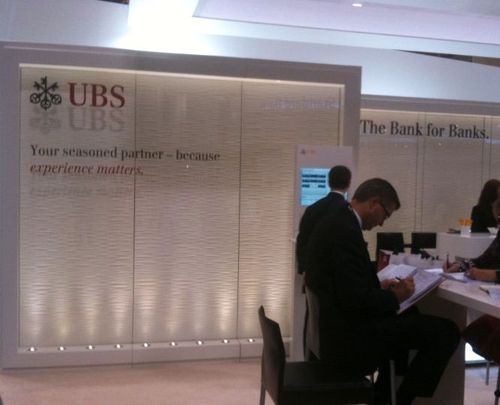

Imagine you’re the UBS trader Kweku Adoboli.

You work on a desk where your major instrument for looking at different trading scenarios is an Excel spreadsheet.

Now I’m not saying this is the case, but many organisations in the City use Excel spreadsheets for their traders’ portfolios.

These spreadsheets form pieces of puzzles that are distributed and decentralised.

In a world of exabytes, having microbits of data distributed and decentralised is an issue, because it’s the bit of a byte that can bite.

Bearing in mind that banks are just bits and bytes of data, having a bit of a byte that can bite in a petabyte or more of data is the reason why big data is a critical factor in the future of banking and risk.

As Werner Steinmuller said in the panel discussion this morning: “There is no risk free system, but we are trying to make it better”.

The problem may be this … you cannot have a risk-free system where distributed data is allowed to be operated in an unregulated, unmanaged and individually risk-fuelled way.

Lots of food for thought here …

… meantime, I’m off for another whirlwind tour of the exhibit floor and party time (yay).

Chris M Skinner

Chris Skinner is best known as an independent commentator on the financial markets through his blog, TheFinanser.com, as author of the bestselling book Digital Bank, and Chair of the European networking forum the Financial Services Club. He has been voted one of the most influential people in banking by The Financial Brand (as well as one of the best blogs), a FinTech Titan (Next Bank), one of the Fintech Leaders you need to follow (City AM, Deluxe and Jax Finance), as well as one of the Top 40 most influential people in financial technology by the Wall Street Journal's Financial News.